llm-ification

Understanding the LLM-ification of CHI: Unpacking the Impact of LLMs at CHI through a Systematic Literature Review

Welcome to the GitHub repository for the paper “Understanding the LLM-ification of CHI: Unpacking the Impact of LLMs at CHI through a Systematic Literature Review” 📄 [Preprint](https://arxiv.org/abs/2501.12557).

🌀 Abstract

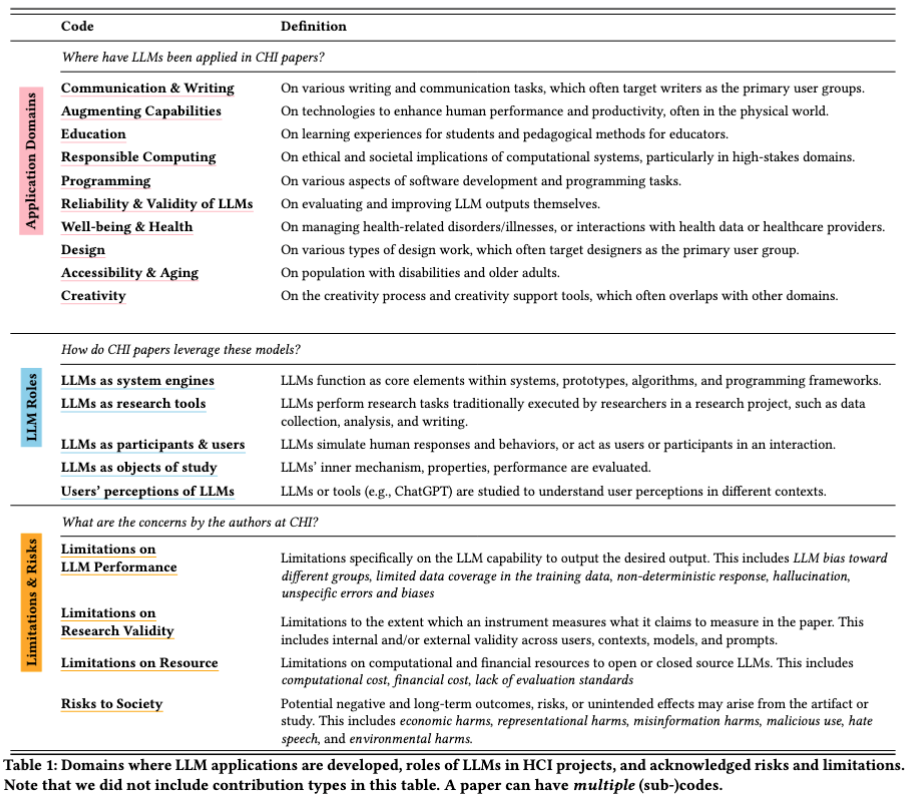

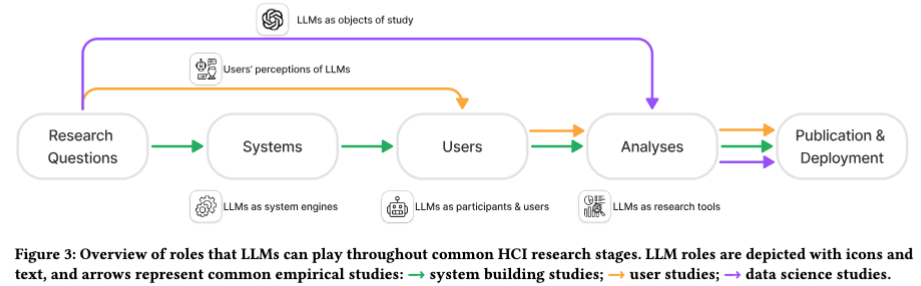

Large language models (LLMs) have been positioned to revolutionize HCI by reshaping not only the interfaces, design patterns, and sociotechnical systems that we study, but also the research practices we use. To date, however, there has been little understanding of LLMs’ uptake in HCI. We address this gap via a systematic literature review of 153 CHI papers from 2020-24 that engage with LLMs. We taxonomize: (1) domains where LLMs are applied; (2) roles of LLMs in HCI projects; (3) contribution types; and (4) acknowledged limitations and risks. We find LLM work in 10 diverse domains, primarily via empirical and artifact contributions. Authors use LLMs in five distinct roles, including as research tools or simulated users. Still, authors often raise validity and reproducibility concerns, and overwhelmingly study closed models. We outline opportunities to improve HCI research with and on LLMs, and provide guiding questions for researchers to consider the validity and appropriateness of LLM-related work.

🏺 Taxonomy

📋 Application Domains, LLM Roles, Limitations & Risks 📋

🎭 LLM Roles throughout common HCI research stages 🎭

🌐 Paper Collections

Our analysis will evolve as we collect and analyze papers from CHI and additional publication venues. If we have missed your paper, please feel free to submit a pull request, open an issue, or ✉️ email us! We’d love to include your work so that, together, we can make this collection more comprehensive.

Citation

If you find this useful in your research, please consider citing this paper:

@article{pang2025understanding,
  title={Understanding the LLM-ification of CHI: Unpacking the Impact of LLMs at CHI through a Systematic Literature Review},
  author={Rock Yuren Pang and Hope Schroeder and Kynnedy Simone Smith and Solon Barocas and Ziang Xiao and Emily Tseng and Danielle Bragg},
  journal={arXiv preprint arXiv:2501.12557},
  year={2025},
  url={https://arxiv.org/abs/2501.12557}
}

Acknowledgement

This work would not have been possible without the research inspiration of my advisor at UW, Katharina Reinecke. We thank the anonymous reviewers for their valuable feedback. We also thank Jenn Wortman Vaughan, Kevin Feng, Mohammed Alsobay, Sachita Nishal, Harsh Kumar, Shivani Kapania, Katelyn Mei, Enhao Zhang, Sandy Kaplan, and many more friends and mentors at the University of Washington and Microsoft Research for their research inspiration, fun conversations, and helpful suggestions.

Contact

I look forward to exploring this line of work beyond CHI. If you have feedback on our paper, or are interested in chatting or collaborating, please don’t hesitate to contact Rock Yuren Pang <ypang2@cs.washington.edu>.